Depth perception can be achieved by a variety of 3D sensors ranging from stereo vision with cameras to

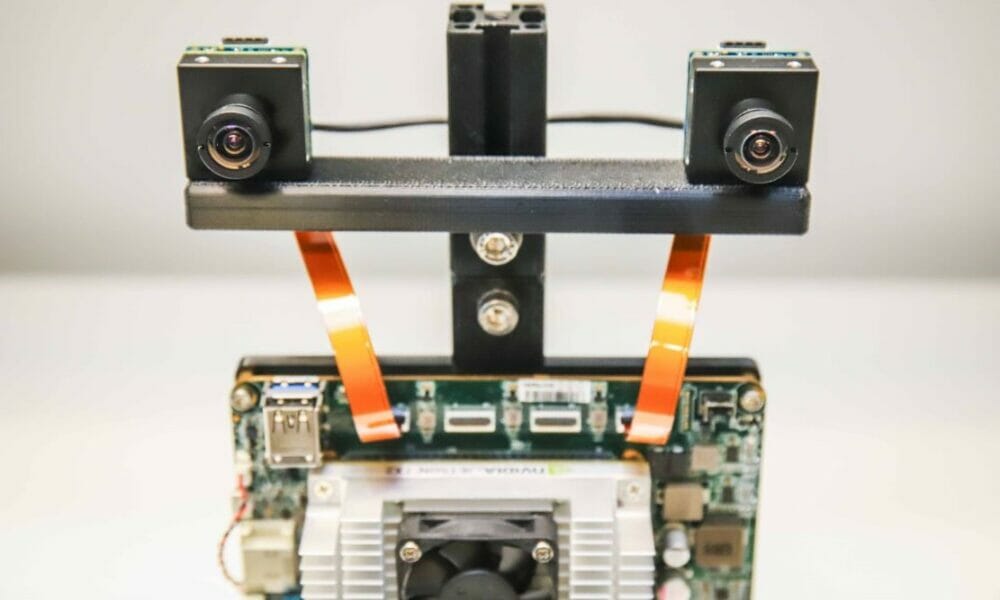

lidar and ToF sensors, each with its own strengths and weaknesses. While several off-the-shelf options exist, a custom embedded solution can cater to specific application requirements better. Our embedded depth perception solution provides a high-resolution colour point cloud using custom stereo setup with cameras. Depending on factors such as accuracy, baseline, field-of-view, and resolution, such a solution can be built using relatively inexpensive components and open-source software.
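Accuracy, baseline, field of view, and resolution are linked through the standard pinhole stereo relation for a rectified camera pair: depth is Z = f·B/d, where f is the focal length in pixels, B the baseline in metres, and d the disparity in pixels. A one-pixel disparity step therefore changes depth by roughly ΔZ ≈ Z²·Δd/(f·B). The sketch below is a minimal illustration of that textbook relation, not the article's own code. The function names are hypothetical, and it assumes ideal rectified images and a horizontal field of view given in degrees.

```python
import math


def focal_length_px(image_width_px: int, hfov_deg: float) -> float:
    """Pinhole-model focal length in pixels from image width and horizontal FOV."""
    return (image_width_px / 2.0) / math.tan(math.radians(hfov_deg) / 2.0)


def depth_from_disparity(disparity_px: float, focal_px: float, baseline_m: float) -> float:
    """Depth Z = f * B / d for a rectified stereo pair (hypothetical helper)."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def depth_resolution(depth_m: float, focal_px: float, baseline_m: float,
                     disparity_step_px: float = 1.0) -> float:
    """Approximate depth change for one disparity step: dZ ~= Z^2 * dd / (f * B)."""
    return depth_m ** 2 * disparity_step_px / (focal_px * baseline_m)


if __name__ == "__main__":
    # Hypothetical numbers for illustration only, not the article's hardware.
    f = focal_length_px(1440, 60.0)
    print(f"focal length: {f:.1f} px")
    print(f"depth at 50 px disparity: {depth_from_disparity(50, f, 0.10):.3f} m")
    print(f"depth step at 2 m: {depth_resolution(2.0, f, 0.10) * 100:.1f} cm")
```

Because ΔZ grows with Z² and shrinks with f·B, widening the baseline or using a higher-resolution sensor (or a narrower field of view, which raises f in pixels) improves depth precision at range. The trade-off is a larger minimum working distance and a smaller field of view.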

In this article, machine vision engineers at Teledyne FLIR give an overview of stereo vision and walk you through the steps needed to design your own depth perception solution. The article compares the pros and cons of two design options suited to different space and computational requirements, and gives detailed steps for building, calibrating, and running real-time depth mapping with one of them. That setup runs without a host computer and uses off-the-shelf hardware and open-source software. Read on to learn more. The article covers:

• A simplified overview of a stereo vision system

• How machine vision cameras can be used to build a depth perception solution

• Two design options depending on your space and computational requirements

• Step-by-step guide with sample code for building one of the options

• Off-the-shelf hardware and open-source software required to build the system

• Steps for calibration and real-time depth mapping

• Unique features, ease of use, and integration enabled by the Spinnaker SDK